How does it work?

The Function Call

1

1.) Standardize all features (rescale each one to zero mean and unit variance)

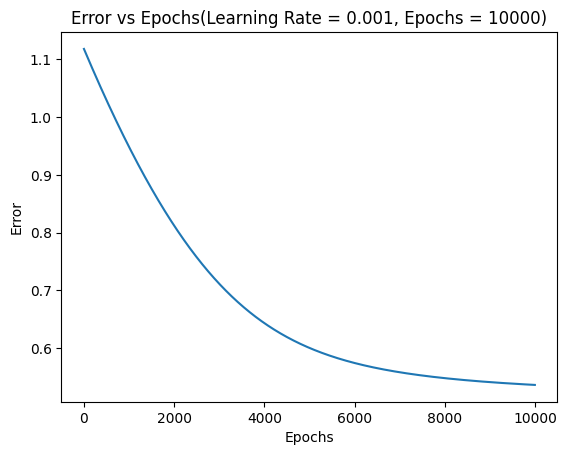

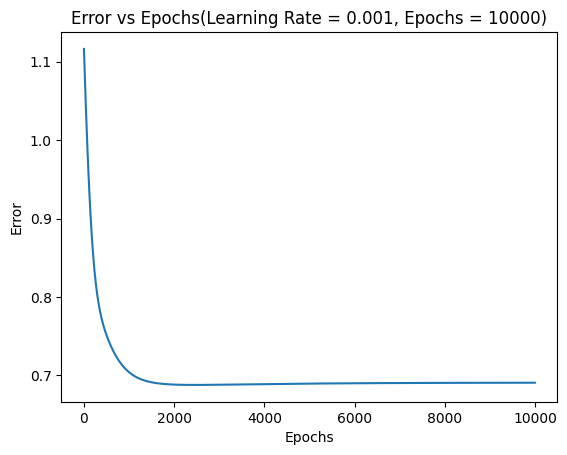

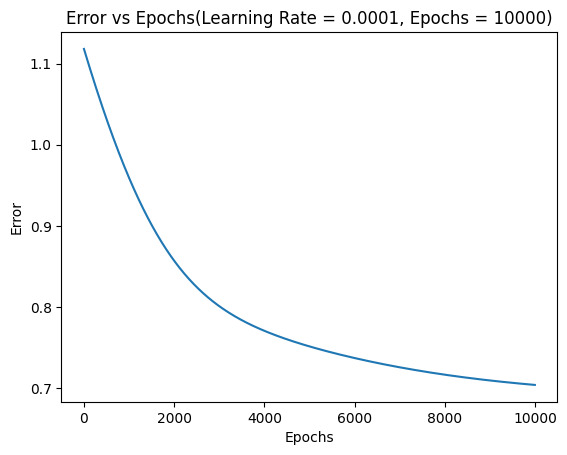

2.) Each parameter (9 weights + bias) is initialized randomly, and we calculate sigmoid(z) for each sample, where z is the weighted sum of that sample's features plus the bias

3.) Calculate the error between the actual value and the predicted value (the output of the sigmoid function). This is repeated for each sample and averaged.

4.) Use the update rule to update each weight
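Steps 1 and 2 could be sketched roughly as follows. This is a minimal illustration rather than the original implementation: the helper names (`standardize`, `init_params`, `predict`), the initialization range, and the seed are all assumptions made for the example.

```python
import math
import random

def standardize(rows):
    """Rescale every feature column to mean 0 and standard deviation 1."""
    n_features = len(rows[0])
    means = [sum(r[j] for r in rows) / len(rows) for j in range(n_features)]
    stds = []
    for j in range(n_features):
        var = sum((r[j] - means[j]) ** 2 for r in rows) / len(rows)
        stds.append(math.sqrt(var) or 1.0)  # avoid dividing by zero on constant columns
    return [[(r[j] - means[j]) / stds[j] for j in range(n_features)] for r in rows]

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def init_params(n_features, seed=0):
    """Random starting weights and bias (range and seed are assumptions)."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_features)]
    bias = rng.uniform(-0.5, 0.5)
    return weights, bias

def predict(weights, bias, x):
    """Predicted probability for one sample: sigmoid of the weighted sum plus bias."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return sigmoid(z)
```

With 9 features, `init_params(9)` returns 9 weights and one bias, and `predict` maps any standardized sample to a value strictly between 0 and 1.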

2

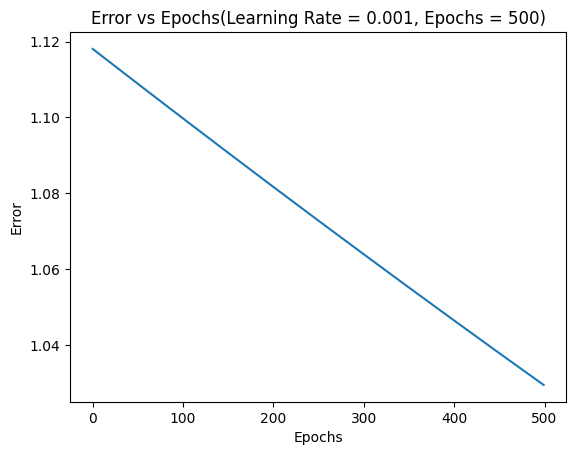

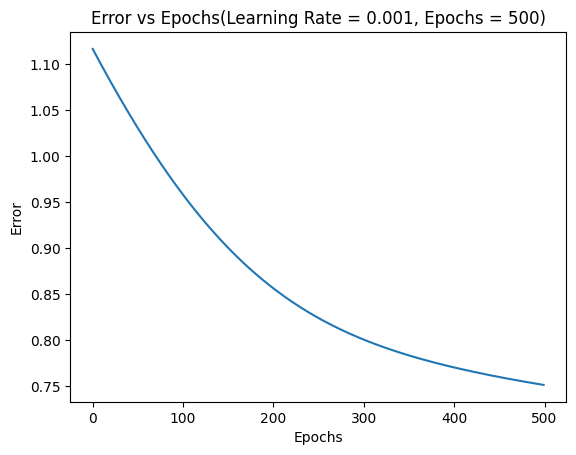

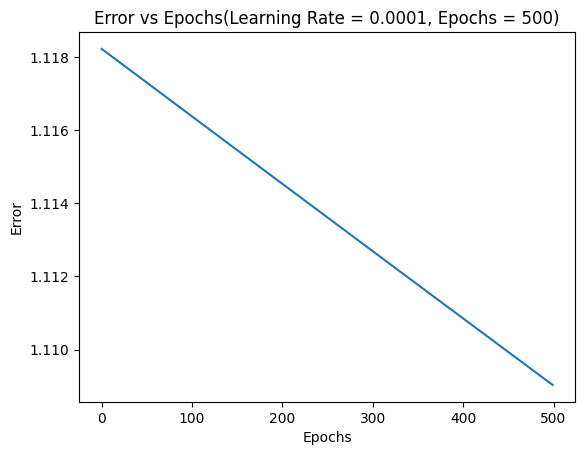

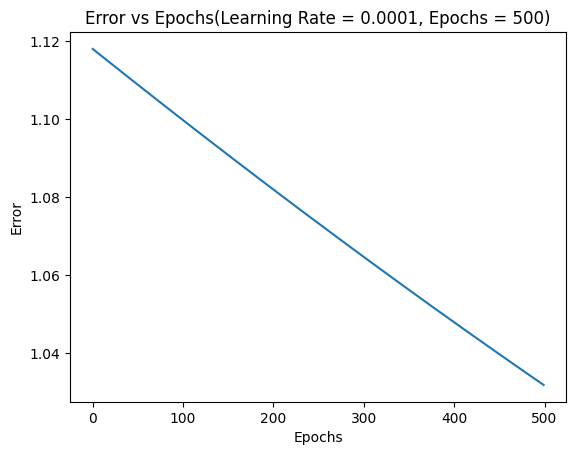

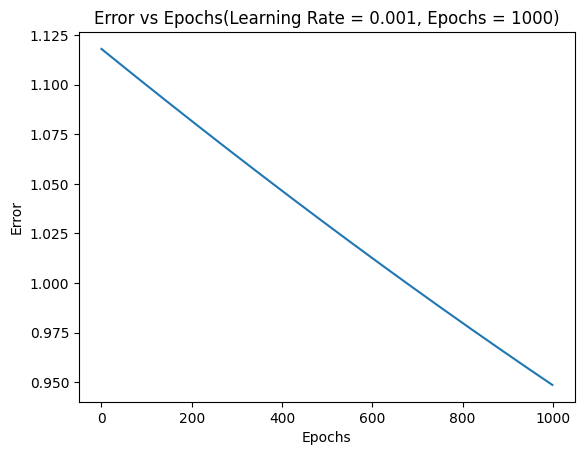

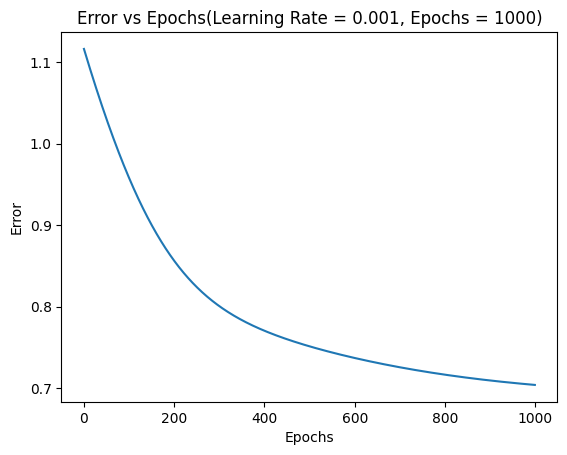

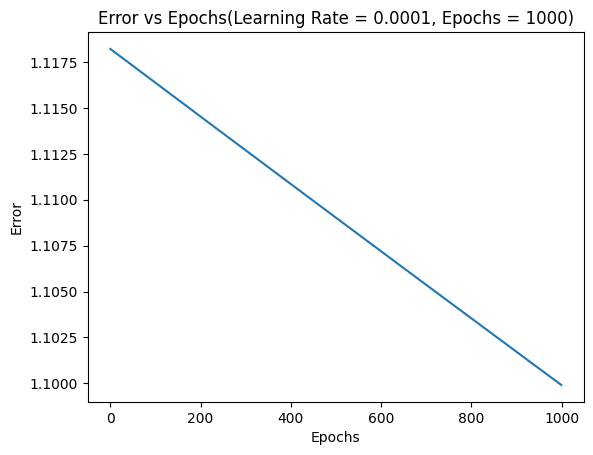

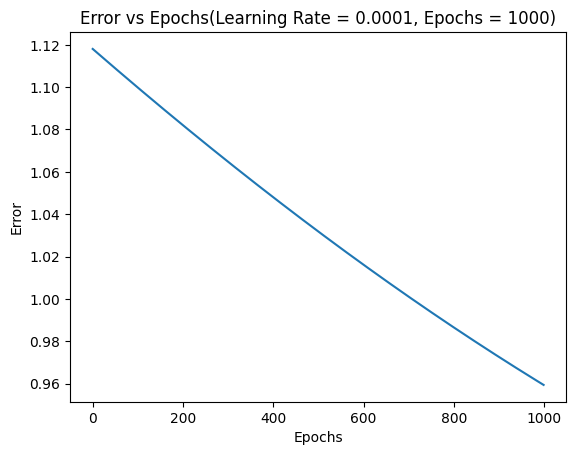

After each iteration of the gradient descent algorithm, we calculate the error using this cost function, which is essentially the difference between the predicted value and the actual value, averaged over all samples.
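The cost function itself appears only in the figures, so the sketch below assumes the binary cross-entropy (log loss) that is commonly paired with a sigmoid output. The function name `log_loss` and the clipping constant `eps` are illustrative choices, not taken from the original.

```python
import math

def log_loss(y_true, y_pred, eps=1e-12):
    """Average binary cross-entropy between labels (0 or 1) and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never receives 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)
```

A lower value means the predictions sit closer to the actual labels, so this number should shrink as gradient descent progresses.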

3

To update the weights, we apply the update rule, scaled by its learning rate, as seen below.

We need to take the partial derivative of the error function with respect to each individual weight (theta).
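One gradient descent step might look like the sketch below. It assumes the log-loss cost, for which the partial derivative with respect to each weight works out to the average of (prediction - actual) times that weight's feature value. The name `gradient_step` and the default learning rate of 0.1 are assumptions for illustration.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def gradient_step(weights, bias, X, y, learning_rate=0.1):
    """Apply one update: theta_j <- theta_j - learning_rate * dJ/dtheta_j."""
    m = len(X)
    grad_w = [0.0] * len(weights)
    grad_b = 0.0
    for x_i, y_i in zip(X, y):
        pred = sigmoid(bias + sum(w * v for w, v in zip(weights, x_i)))
        err = pred - y_i                 # prediction minus actual value
        for j, v in enumerate(x_i):
            grad_w[j] += err * v / m     # averaged partial derivative for weight j
        grad_b += err / m                # averaged partial derivative for the bias
    new_weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    new_bias = bias - learning_rate * grad_b
    return new_weights, new_bias
```

Calling `gradient_step` repeatedly, and recomputing the cost after each call, gives the iteration loop described above. The learning rate controls how large each step is.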